AWS ElastiCache for Redis as Application Cache

In the modern cloud computing world, most applications use caching to reduce latency and improve performance for read-heavy workloads. In any application, caching plays an important role and can be applied to various layers such as database, UI layer (Session Management), file system, and operating system.

While implementing caching, it is important to understand whether caching particular data will actually improve performance and reduce load. The issues with a JVM in-memory cache in monolithic applications are well documented. Because most modern applications are moving toward microservices, they need an external caching service that fits a microservices architecture.

Traditional In-memory Cache

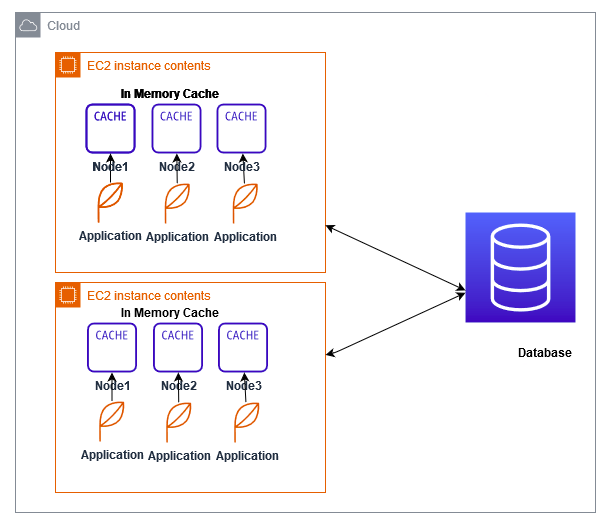

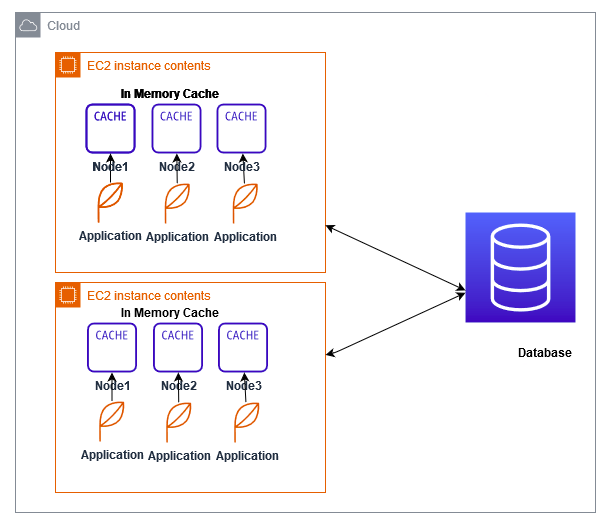

In-memory data caching reduces round trips to a remote server or database. It improves application performance and speeds up response time. It also has a couple of drawbacks, particularly in a microservices architecture. If an organization has multiple microservices sharing the same key/value data, the same data must be cached across the microservices.

With the in-memory cache, the same code must be duplicated across the microservices to cache the data. Creating, updating, or deleting data in the cache of an application running across multiple nodes requires refreshing or restarting all the nodes to reflect the changes.

If the application uses an in-memory cache and the cached data is large, the application will slow down and may suffer long garbage-collection pauses. In-memory cache code is also tightly coupled with application code and is not easily reusable by other components, so developers end up rewriting code for each component that shares the same cached data.
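The stale-data problem described above can be illustrated with a minimal sketch. Everything here is hypothetical and for illustration only: `DATABASE` stands in for the system of record, and each `Node` represents one application instance holding its own in-process cache.

```python
# Hypothetical illustration: each application node keeps its own in-process cache.
DATABASE = {"price:42": "10.00"}  # stand-in for the system of record


class Node:
    """One application instance with a private, in-process cache."""

    def __init__(self, name):
        self.name = name
        self.local_cache = {}  # lives on this node's heap only

    def read(self, key):
        # Serve from the local cache when possible, otherwise load and remember.
        if key not in self.local_cache:
            self.local_cache[key] = DATABASE[key]
        return self.local_cache[key]

    def write(self, key, value):
        # Updates the database and this node's cache; other nodes are not told.
        DATABASE[key] = value
        self.local_cache[key] = value


node_a, node_b = Node("a"), Node("b")
node_a.read("price:42")
node_b.read("price:42")            # both nodes now hold "10.00"
node_a.write("price:42", "12.50")

print(node_a.read("price:42"))     # 12.50
print(node_b.read("price:42"))     # 10.00 (stale until node_b is refreshed or restarted)
```

A shared external cache removes this problem because every node reads and writes the same copy of the data.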

New Technologies

Forward-thinking application development organizations in the IT industry have been making waves in moving from monolithic applications to microservices. The key characteristic of a microservices architecture is that each individual service is decoupled from other services and each acts as an independent entity.

Caching is a mandatory requirement for building scalable microservices applications. Industries are moving toward deploying microservices applications in the cloud, creating a need for cloud-based services that support distributed caching and are decoupled from application code. Amazon ElastiCache is a fully managed Amazon Web Services data store that offers two engines, Redis and Memcached. Both are in-memory data stores that can be used as a cache.

Key Features of the Caching Solution

The caching solution must meet the following requirements:

- Integrates easily with application code

- Requires minimal APIs

- Supports distributed cache for a clustered environment

- Supports sharing of objects across JVMs (in Java applications)

- Is scalable

- Is easy to maintain

- Supports various data structures

- Supports persisting data to disk if required

- Reduces the load on the back end

- Enables prediction of the performance of the application based on load

- Reduces database load and cost

- Supports storage of key/value pairs

An in-memory cache stores data in key/value format and supports data structures such as strings, hashes, lists, and sets, so a distributed cloud cache service that replaces it must also store data in key/value format and support the same data structures. AWS ElastiCache for Redis meets all the requirements to replace an in-memory cache, while Memcached, intended for simpler applications, does not.

Introduction to AWS ElastiCache for Redis

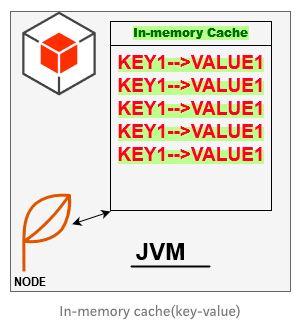

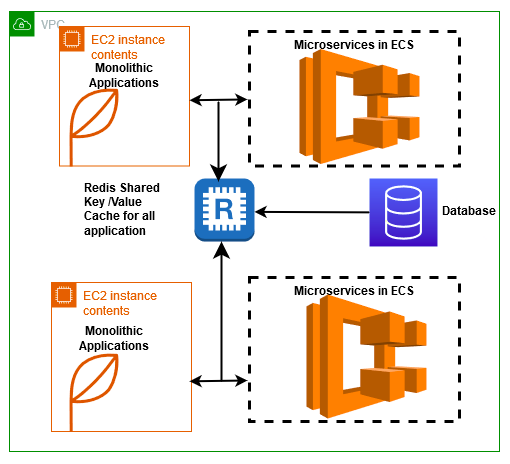

Redis is a great choice to implement a distributed cache that stores and supports flexible data structures in key/value format. Typically, it is an external service, so many microservices applications or monolithic applications can share the same cache or same cluster.

Since it is an external service, it enables scaling for the higher load without impacting or making changes to the application code. Use of Redis cache provides efficient application performance for read and write operations.

Today, most web applications are built on RESTful web APIs over HTTP, which require low-latency responses from the back end. Database caching in AWS ElastiCache for Redis can dramatically increase throughput and reduce data retrieval latency.
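One common way to apply database caching is the cache-aside pattern, sketched below. This is an illustration under stated assumptions, not a definitive implementation: `get_or_load` and `load_user_from_db` are hypothetical names, `client` is assumed to expose redis-py style `get(name)` and `set(name, value, ex=seconds)` methods, and `InMemoryClient` is a stand-in so the sketch runs without a Redis server.

```python
import json


def get_or_load(client, key, loader, ttl_seconds=300):
    """Cache-aside read: try the cache, fall back to the loader, then cache the result.

    `client` is assumed to expose redis-py style get(name) and
    set(name, value, ex=seconds); `loader` is a hypothetical callable
    that queries the real database.
    """
    cached = client.get(key)
    if cached is not None:
        return json.loads(cached)  # json.loads accepts both str and bytes
    value = loader()
    client.set(key, json.dumps(value), ex=ttl_seconds)
    return value


class InMemoryClient:
    """Stand-in for a Redis client so the sketch runs without a server (ignores TTL)."""

    def __init__(self):
        self._data = {}

    def get(self, name):
        return self._data.get(name)

    def set(self, name, value, ex=None):
        self._data[name] = value
        return True


db_calls = []


def load_user_from_db():
    db_calls.append("query")  # pretend this is an expensive database query
    return {"id": 7, "name": "Ada"}


client = InMemoryClient()
first = get_or_load(client, "user:7", load_user_from_db)   # miss: hits the database
second = get_or_load(client, "user:7", load_user_from_db)  # hit: served from the cache
print(first == second, len(db_calls))  # True 1
```

With a real cluster, the stand-in would be replaced by a Redis client pointed at the ElastiCache endpoint. The TTL bounds how long stale data can be served after the database changes.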

In the web application's UI layer, a user’s session data can be cached, speeding up the exchange between the user and the web application.
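A session store along these lines might look like the following sketch. The function names, the `session:` key prefix, and the 30-minute timeout are assumptions made for illustration. `client` is assumed to expose redis-py style `setex(name, time, value)`, `get(name)`, and `delete(*names)`, and `InMemorySessionClient` is a stand-in that lets the example run without a server.

```python
import json
import uuid

SESSION_TTL_SECONDS = 1800  # assumed 30-minute session lifetime


def create_session(client, user_id):
    """Store a new session under a random ID and return that ID."""
    session_id = uuid.uuid4().hex
    payload = json.dumps({"user_id": user_id})
    client.setex(f"session:{session_id}", SESSION_TTL_SECONDS, payload)
    return session_id


def load_session(client, session_id):
    """Return the session dict, or None if it expired or never existed."""
    raw = client.get(f"session:{session_id}")
    return json.loads(raw) if raw is not None else None


def end_session(client, session_id):
    """Remove the session, for example on logout."""
    client.delete(f"session:{session_id}")


class InMemorySessionClient:
    """Stand-in for a Redis client (does not enforce expiry)."""

    def __init__(self):
        self._data = {}

    def setex(self, name, time, value):
        self._data[name] = value
        return True

    def get(self, name):
        return self._data.get(name)

    def delete(self, *names):
        return sum(1 for n in names if self._data.pop(n, None) is not None)


client = InMemorySessionClient()
sid = create_session(client, user_id=7)
print(load_session(client, sid))  # {'user_id': 7}
end_session(client, sid)
print(load_session(client, sid))  # None
```

Because the session lives in the shared cache rather than on one web server, any application node can serve the user's next request.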

AWS ElastiCache for Redis is developer-friendly: Redis client libraries are available for nearly all popular programming languages, including C, C#, Java, Go, Python, and PHP.

Why Use AWS Redis Cache?

AWS ElastiCache for Redis has some useful properties, as below:

- Redis is a clustered cache. It can be shared and accessed by multiple applications or multiple microservices, avoiding duplication of the same cache in each application.

- It can persist data to disk if desired.

- Redis supports various data structures.

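- Redis is a NoSQL database.

- It can be highly available because it replicates data from a primary node to replica nodes.

- Because cached data lives outside the application's heap, Redis avoids the garbage-collection pauses that a large in-process cache can cause.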

- It supports the distribution of data using a Publish/Subscribe paradigm.

- Redis supports Session Store; a session-oriented application can store the user session in the cache.

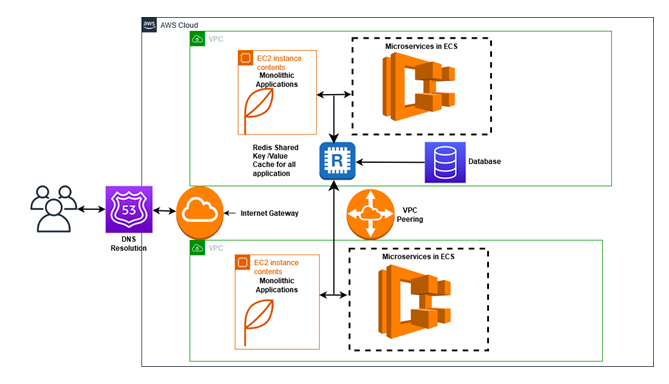

An AWS ElastiCache cluster is not open to the internet; it is accessed from Amazon Elastic Compute Cloud (EC2) instances. A Redis cluster created in a VPC, in an organization or personal account, can be accessed from an EC2 instance within the same VPC.

To access the cache from outside the VPC, but within the same Amazon Network or another VPC, VPC peering must be implemented. AWS ElastiCache for Redis has been exposed as a web service that makes it easy to set up, manage and scale cache in the Cloud.

Challenges and Limitations of AWS ElastiCache for Redis

The following are challenges posed when using AWS ElastiCache for Redis:

- It is a data structure server that supports commands but has no query language.

- It provides only basic security at the instance level (such as access rights).

- Redis is an in-memory store, so all data must fit in memory.

- Because all data is stored in the main memory, there is a risk of data loss from server or process failure.

- Redis offers only two options for persistence, regular snapshotting and append-only files, neither of which is as safe as a real transactional database server.

- Connecting to the Redis cache from a local machine requires an SSH tunnel through an EC2 instance in the same VPC as the cache.

- DevOps engineers must ensure security groups are configured and enabled for required microservices or applications needing access to the AWS ElastiCache for Redis service.

Final Words

This article focused on using AWS ElastiCache for Redis as a caching solution that integrates easily with application code. If cache data must be shared across applications, AWS ElastiCache for Redis lets multiple applications share and access it without restarting or refreshing nodes. In short, it provides essentially the same functionality as an in-memory cache while removing many of that cache's drawbacks.

Credit: The content has originally been published on Medium.

The post AWS ElastiCache for Redis as Application Cache appeared first on Whizlabs Blog.