“In today’s rapidly evolving digital landscape, our customers use a growing number of services on Azure, along with a growing number of environments in which those services run. Ensuring the performance and security of Azure means our teams are vigilant about regular maintenance and updates to keep pace with customer needs. Stability, reliability, and timely rollout of updates remain our top priorities when testing and deploying changes. To minimize impact to customers and services, we must account for a multifaceted software, hardware, and platform landscape. This is an example of an optimization problem, an industry concept that revolves around finding the best way to allocate resources, manage workloads, and ensure performance while keeping costs low and adhering to various constraints. Given the complexity and ever-changing nature of cloud environments, this task is both critical and challenging.

I’ve asked Rohit Pandey, Principal Data Scientist Manager, and Akshay Sathiya, Data Scientist, from the Azure Core Insights Data Science Team to discuss approaches to optimization problems in cloud computing and to share a resource we’ve developed that customers can use to solve these problems in their own environments.”—Mark Russinovich, CTO, Azure

Optimization problems in cloud computing

Optimization problems exist across the technology industry. Today’s software products are engineered to function across a wide array of environments, such as websites, applications, and operating systems. Similarly, Azure must perform well on a diverse set of servers and server configurations that span hardware models, virtual machine (VM) types, and operating systems across a production fleet. Given limited time and computational resources, and the growing complexity that comes with every service, hardware model, and VM type we add, it may not be possible to reach an optimal solution. For problems such as these, we use an optimization algorithm to find a near-optimal solution within a reasonable amount of time and resources. Using an optimization problem we encounter when setting up the environment for a software and hardware testing platform, we will discuss the complexity of such problems and introduce a library we created to solve these kinds of problems, which can be applied across domains.

Environment design and combinatorial testing

If you were to design an experiment for evaluating a new medication, you would test it on a diverse demographic of participants to assess negative effects that may affect only a select group of people. In cloud computing, we similarly need to design an experimentation platform that, ideally, is representative of all the properties of Azure and sufficiently tests every possible configuration in production. In practice, that would make the test matrix too large, so we have to target the important and risky configurations. Additionally, just as you might avoid taking two medications that can negatively interact with one another, properties within the cloud also have constraints that must be respected for successful use in production. For example, hardware model 1 might only work with VM types 1 and 2, but not 3 and 4. Lastly, customers may have additional constraints that we must consider in our environment.

With all the possible combinations, we must design an environment that can test the important combinations and that takes into consideration the various constraints. AzQualify is our platform for testing Azure internal programs where we leverage controlled experimentation to vet any changes before they roll out. In AzQualify, programs are A/B tested on a wide range of configurations and combinations of configurations to identify and mitigate potential issues before production deployment.

While it would be ideal to test a new medication and collect data on every possible user and every possible interaction with every other medication in every scenario, there is not enough time or resources to do that. We face the same constrained optimization problem in cloud computing, and it is NP-hard.

NP-hard problems

An NP-hard (nondeterministic polynomial-time hard) problem is hard to solve, and it can be hard even to verify that a proposed solution is the best one (if someone handed you the best solution). Consider a new medication that might cure multiple diseases: testing it involves a series of incredibly complex, interconnected trials across different patient groups, environments, and conditions. Each trial’s outcome might depend on others, making the trials hard to conduct and the interconnected results very challenging to verify. We cannot easily determine whether this medication is the best, nor confirm it if someone claims it is. In computer science, it has not been proven (and is widely considered unlikely) that optimal solutions to NP-hard problems can be found efficiently.

Another NP-hard problem we consider in AzQualify is the allocation of VMs across hardware to balance load. This involves assigning customer VMs to physical machines in a way that maximizes resource utilization, minimizes response time, and avoids overloading any single physical machine. To visualize and solve problems like these that involve interconnected data, we represent them with a property graph.
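The load-balancing problem above is closely related to bin packing. As a rough illustration, and not AzQualify’s actual allocator, the Python sketch below applies the classic first-fit-decreasing heuristic. It assumes a single resource dimension (CPU cores), identical nodes, and ignores response time; the function name, the core demands, and the 16-core capacity are hypothetical.

```python
def first_fit_decreasing(vm_cores, node_capacity):
    """Assign VMs (given by core demand) to nodes with first-fit decreasing.

    Each VM, largest first, goes on the first node that still has room;
    a new node is opened only when no existing node fits.
    Returns a list of nodes, each a list of (vm_id, cores) pairs.
    """
    nodes = []  # VMs placed on each node
    free = []   # remaining capacity of each node, parallel to `nodes`
    order = sorted(enumerate(vm_cores), key=lambda item: item[1], reverse=True)
    for vm_id, cores in order:
        if cores > node_capacity:
            raise ValueError(f"VM {vm_id} needs {cores} cores; capacity is {node_capacity}")
        for i, remaining in enumerate(free):
            if cores <= remaining:
                nodes[i].append((vm_id, cores))
                free[i] -= cores
                break
        else:
            # No existing node can take this VM without overloading it.
            nodes.append([(vm_id, cores)])
            free.append(node_capacity - cores)
    return nodes


# Hypothetical core demands for eight customer VMs.
demands = [8, 4, 4, 2, 16, 2, 8, 4]
placement = first_fit_decreasing(demands, node_capacity=16)
for node in placement:
    print(node)
```

With these demands totaling 48 cores, the heuristic fills three 16-core nodes exactly. First-fit decreasing is fast, but it is a heuristic: on other inputs it can open more nodes than an optimal packing would, trading optimality for speed.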

Property graph

A property graph is a data structure commonly used in graph databases to model complex relationships between entities. Here, each type of property gets its own kind of vertex, and edges represent compatibility relationships: each property is a vertex in the graph, and two properties share an edge if they are compatible with each other. This model is especially helpful for visualizing constraints. Additionally, expressing constraints in this form allows us to leverage existing graph concepts and algorithms when solving new optimization problems.
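As a minimal illustration of this representation, the Python sketch below stores a property dictionary on each vertex and an undirected adjacency set for compatibility, using the earlier example of a hardware model that works only with the first two of four VM types. The `PropertyGraph` class, its methods, and the vertex names are hypothetical; this is not the API of any particular graph database or of optimizn.

```python
from collections import defaultdict


class PropertyGraph:
    """Minimal undirected property graph: each vertex carries a property dict,
    and an edge means 'these two properties are compatible'."""

    def __init__(self):
        self.props = {}              # vertex -> dict of properties
        self.adj = defaultdict(set)  # vertex -> set of compatible vertices

    def add_vertex(self, name, **props):
        self.props[name] = props

    def add_edge(self, u, v):
        # Compatibility is symmetric, so store the edge in both directions.
        self.adj[u].add(v)
        self.adj[v].add(u)

    def compatible(self, u, v):
        return v in self.adj[u]

    def neighbors_of_type(self, name, kind):
        """Compatible vertices whose 'kind' property equals `kind`, sorted."""
        return sorted(n for n in self.adj[name] if self.props[n]["kind"] == kind)


g = PropertyGraph()
g.add_vertex("hw1", kind="hardware")
for i in range(1, 5):
    g.add_vertex(f"vm{i}", kind="vm_type")
# Hypothetical constraint: hardware 1 works only with VM types 1 and 2.
g.add_edge("hw1", "vm1")
g.add_edge("hw1", "vm2")

print(g.neighbors_of_type("hw1", "vm_type"))  # ['vm1', 'vm2']
print(g.compatible("hw1", "vm3"))             # False
```

Because a missing edge means "incompatible", constraints are expressed simply by which edges are absent, which is what lets standard graph algorithms operate on them.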

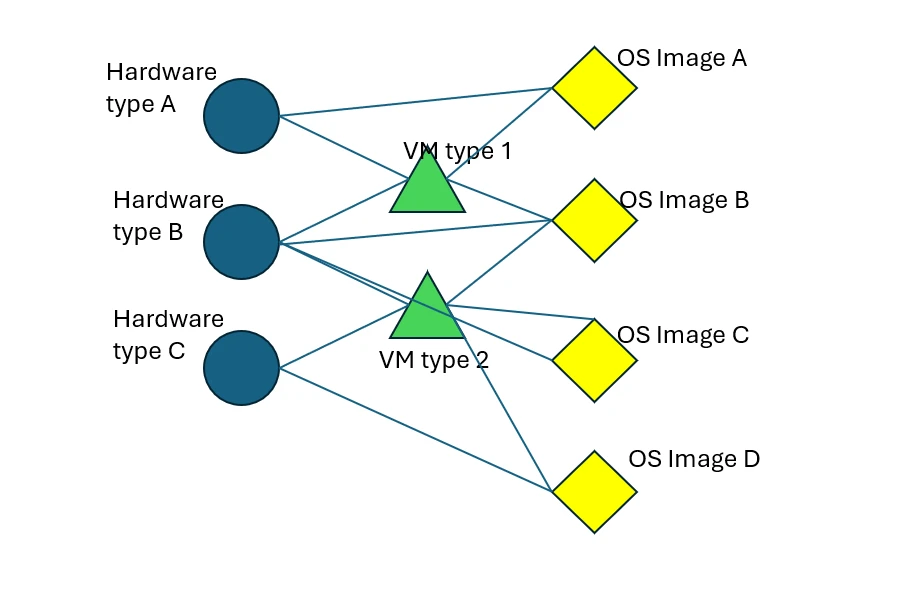

Figure 1 shows an example property graph consisting of three types of properties (hardware models, VM types, and operating system images). Vertices represent specific properties: hardware models (A, B, and C, represented by blue circles), VM types (D and E, represented by green triangles), and OS images (F, G, H, and I, represented by yellow diamonds). Edges (black lines between vertices) represent compatibility relationships; vertices connected by an edge represent properties that are compatible with each other, such as hardware model C, VM type E, and OS image I.

Figure 1: An example property graph showing compatibility between hardware models (blue), VM types (green), and operating systems (yellow)

In Azure, nodes are physically located in datacenters across multiple regions. Azure customers use VMs, which run on nodes. A single node may host several VMs at the same time, with each VM allocated a portion of the node’s computational resources (e.g., memory or storage) and running independently of the other VMs on the node. For a node with a given hardware model to run a given VM type with a given operating system image on that VM, all three must be compatible with each other, so in the graph every pair of the three vertices is connected by an edge. Hence, valid node configurations are represented by cliques in the graph, each containing one hardware model, one VM type, and one OS image.
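The clique condition can be checked directly. The Python sketch below enumerates every (hardware, VM type, OS image) triple whose three pairs are all compatible. The vertex names follow Figure 1, but the edge list is an assumption: the text only confirms that C, E, and I are mutually connected, so the other edges are hypothetical.

```python
from itertools import product

# Vertices grouped by property type, named as in Figure 1.
HARDWARE = ["A", "B", "C"]
VM_TYPES = ["D", "E"]
OS_IMAGES = ["F", "G", "H", "I"]

# Hypothetical compatibility edges; the figure's exact edges are not listed in the text.
EDGES = {
    frozenset(pair)
    for pair in [
        ("A", "D"), ("A", "E"), ("B", "D"), ("C", "E"),               # hardware-VM
        ("D", "F"), ("D", "G"), ("E", "H"), ("E", "I"),               # VM-OS
        ("A", "F"), ("A", "G"), ("A", "H"),                           # hardware-OS
        ("B", "F"), ("B", "G"), ("C", "H"), ("C", "I"),
    ]
}


def compatible(u, v):
    return frozenset((u, v)) in EDGES


def valid_configurations():
    """Return every (hardware, vm_type, os_image) triple that forms a 3-clique."""
    return [
        (hw, vm, os_image)
        for hw, vm, os_image in product(HARDWARE, VM_TYPES, OS_IMAGES)
        if compatible(hw, vm) and compatible(vm, os_image) and compatible(hw, os_image)
    ]


configs = valid_configurations()
for config in configs:
    print(config)
```

Checking every triple is fine for a graph this small, but the number of candidate combinations grows multiplicatively with each property type added, which is part of why the larger design problem becomes hard.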

An example of the environment design problem we solve in AzQualify is covering all the hardware models, VM types, and operating system images in the graph above. Let’s say we’d like hardware model A to be on 40% of the machines in our experiment, VM type D to make up 50% of the VMs running on those machines, and OS image F to be on 10% of all the VMs. Lastly, we must use exactly 20 machines. Deciding how to allocate hardware models, VM types, and operating system images among those machines so that the compatibility constraints in Figure 1 are satisfied, while getting as close as possible to the other requirements, is an example of a problem for which no efficient algorithm is known.
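To make this objective concrete, the Python sketch below treats compatibility as a hard constraint (each machine is assigned one whole valid clique) and treats the percentage targets and full coverage as soft penalties, then searches with simulated annealing. It is not optimizn’s API or AzQualify’s production formulation. It assumes one VM per machine and a hypothetical set of seven valid configurations; the names `CONFIGS`, `cost`, and `anneal`, the penalty weight, and the cooling schedule are all illustrative.

```python
import math
import random
from collections import Counter

# Hypothetical valid (hardware, VM type, OS image) cliques for an assumed version of Figure 1.
CONFIGS = [
    ("A", "D", "F"), ("A", "D", "G"), ("A", "E", "H"),
    ("B", "D", "F"), ("B", "D", "G"), ("C", "E", "H"), ("C", "E", "I"),
]
ALL_VERTICES = "ABCDEFGHI"
# Targets from the text. Assumption: one VM per machine, so all shares are over machines.
TARGET_SHARES = {"A": 0.4, "D": 0.5, "F": 0.1}
UNCOVERED_PENALTY = 10.0  # hypothetical weight for a property that never appears


def cost(assignment):
    """Soft-constraint cost of a list of configuration indices, one per machine."""
    counts = Counter()
    for idx in assignment:
        counts.update(CONFIGS[idx])
    n = len(assignment)
    deviation = sum(abs(counts[v] - share * n) for v, share in TARGET_SHARES.items())
    uncovered = sum(1 for v in ALL_VERTICES if counts[v] == 0)
    return deviation + UNCOVERED_PENALTY * uncovered


def anneal(n_machines=20, steps=20_000, start_temp=2.0, cooling=0.9995, seed=0):
    """Simulated annealing; compatibility always holds because every machine
    receives a whole valid configuration. Returns the best assignment seen."""
    rng = random.Random(seed)
    current = [rng.randrange(len(CONFIGS)) for _ in range(n_machines)]
    current_cost = cost(current)
    best, best_cost = list(current), current_cost
    temp = start_temp
    for _ in range(steps):
        # Neighbor: give one randomly chosen machine a random valid configuration.
        candidate = list(current)
        candidate[rng.randrange(n_machines)] = rng.randrange(len(CONFIGS))
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Always accept improvements; accept worse moves with probability exp(-delta / temp).
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
        temp = max(temp * cooling, 1e-6)  # geometric cooling with a small floor
    return best, best_cost


best, best_cost = anneal()
print(best_cost, Counter(CONFIGS[i] for i in best))
```

Accepting an occasional worse assignment lets the search escape local minima. Because the search is randomized, the result depends on the seed and schedule, and reaching the best achievable cost is not guaranteed; the sketch returns a near-optimal assignment rather than a certified optimum.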

Library of optimization algorithms

From what we learned solving NP-hard problems, we developed general-purpose code and packaged it in the optimizn library. Although Python and R libraries exist for the algorithms we implemented, they have limitations that make them impractical for complex combinatorial, NP-hard problems like these. In Azure, we use this library to solve various, dynamic types of environment design problems, and its routines can be applied to any type of combinatorial optimization problem, with extensibility across domains in mind. Our environment design system, which uses this library, has helped us cover a wider variety of properties in testing, helping us catch five to ten regressions per month. By identifying regressions while changes are still in pre-production, we can improve Azure’s internal programs and minimize potential impact on platform stability and customers once changes are broadly deployed.
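One way to make search routines reusable across any combinatorial problem is to separate the search algorithm from the problem definition. The Python sketch below illustrates that idea with a hypothetical `CombinatorialProblem` interface and a generic randomized local search. It is not optimizn’s actual API (the library’s README.md documents that), and number partitioning serves only as a small NP-hard stand-in.

```python
import random
from abc import ABC, abstractmethod


class CombinatorialProblem(ABC):
    """Hypothetical interface: a domain supplies these three methods, and any
    generic search routine can then be reused unchanged."""

    @abstractmethod
    def initial_solution(self, rng): ...

    @abstractmethod
    def neighbor(self, solution, rng): ...

    @abstractmethod
    def cost(self, solution): ...


def local_search(problem, iterations=5_000, seed=0):
    """Generic randomized local search: move to a neighbor whenever it is no worse."""
    rng = random.Random(seed)
    current = problem.initial_solution(rng)
    current_cost = problem.cost(current)
    for _ in range(iterations):
        candidate = problem.neighbor(current, rng)
        candidate_cost = problem.cost(candidate)
        if candidate_cost <= current_cost:
            current, current_cost = candidate, candidate_cost
    return current, current_cost


class NumberPartitioning(CombinatorialProblem):
    """Split numbers into two groups whose sums are as close as possible."""

    def __init__(self, numbers):
        self.numbers = numbers

    def initial_solution(self, rng):
        return [rng.choice((0, 1)) for _ in self.numbers]  # group label per number

    def neighbor(self, solution, rng):
        # Move one randomly chosen number to the other group.
        flipped = list(solution)
        i = rng.randrange(len(flipped))
        flipped[i] = 1 - flipped[i]
        return flipped

    def cost(self, solution):
        group_sums = [0, 0]
        for number, side in zip(self.numbers, solution):
            group_sums[side] += number
        return abs(group_sums[0] - group_sums[1])


problem = NumberPartitioning([8, 7, 6, 5, 4])
solution, gap = local_search(problem)
print(solution, gap)
```

Supporting a new domain only requires a new subclass; `local_search` does not change. Plain local search like this can stall in a local minimum (for these numbers, splitting {8, 6} from {7, 5, 4} leaves a gap of 2 with no improving single move), so practical search routines commonly add escapes such as annealing or random restarts.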

Learn more about the optimizn library

Understanding how to approach optimization problems is pivotal for organizations aiming to maximize efficiency, reduce costs, and improve performance and reliability. Visit our optimizn library to solve NP-hard problems in your compute environment. For those new to optimization or NP-hard problems, visit the README.md file of the library to see how you can interface with the various algorithms. As we continue learning from the dynamic nature of cloud computing, we make regular updates to general algorithms as well as publish new algorithms designed specifically to work on certain classes of NP-hard problems.

By addressing these challenges, organizations can achieve better resource utilization, enhance user experience, and maintain a competitive edge in the rapidly evolving digital landscape. Investing in cloud optimization is not just about cutting costs; it’s about building a robust infrastructure that supports long-term business goals.

The post Advancing cloud platform operations and reliability with optimization algorithms appeared first on Microsoft Azure Blog.