Software development is undergoing a transformation. In the old world, building software was a long relay—weeks to plan, months to build, and quarters to launch. In the new world, AI-powered tools turn ideas into prototypes in hours and production solutions in days. To achieve this, developers need an end-to-end platform that seamlessly connects code, collaboration, and cloud. Microsoft is the only company where all three come together, with Visual Studio Code, GitHub, and Azure forming a unified, AI-native development experience designed to empower every developer to shape the future with AI. We’re delivering a full-stack AI platform with built-in security and trust—giving you everything you need to build and run AI-powered apps and agents, from cloud to edge.

In just two years, we’ve gone from early foundation models to AI agents capable of complex workflows. Microsoft Azure AI Foundry has already grown to support more than 70,000 customers, processing 100 trillion tokens last quarter, and powering 2 billion daily enterprise search queries. What began as an application layer has now become a full-stack platform for building intelligent agents that deliver real business value.

To help these AI agents become even more powerful and handle more complex tasks, they need state-of-the-art models, integrated tools, and built-in governance. Today at Microsoft Build 2025, we’re unveiling 10 major innovations in Azure AI Foundry to make this possible.

1. New models

We’re expanding our catalog with cutting-edge models to give developers more choice. This includes Grok 3 from xAI available today, Flux Pro 1.1 from Black Forest Labs coming soon, and Sora coming soon in preview via Azure OpenAI in Foundry Models. We now have over 10,000 open-source models from Hugging Face available in Foundry Models. We support full fine-tuning (including LoRA/QLoRA and DPO), so you can tailor fine-tunable models to your needs. Additionally, we’re rolling out a new developer tier for fine-tuning—no hosting fees, just a simple way to experiment and evaluate fine-tuning methods without the overhead.

2. Smarter model system

Choosing the right model for each task is now easier. Our new model router automatically selects the optimal Azure OpenAI model for your prompt—boosting quality while reducing costs. Starting next month, we’re extending reserved capacity across Azure OpenAI and select Foundry Models (like models from Black Forest Labs, DeepSeek, Mistral, Meta, and xAI), so you get consistent performance even under heavy load. All these models will be accessible through a unified API and Model Context Protocol (MCP) server for Azure AI Foundry models, making it seamless to go from prototype to production.

3. Azure AI Foundry Agent Service

Azure AI Foundry Agent Service is now generally available, empowering you to design, deploy, and scale production-grade AI agents with ease. More than 10,000 organizations—including leaders like Heineken, Carvana, and Fujitsu—have already used Azure AI Foundry to automate complex business processes with their own data and knowledge. This fully managed service takes care of infrastructure and orchestration, and it comes with ready-to-use templates, actions, and connectors to more than 1,400 enterprise data sources (such as SharePoint, Microsoft Fabric, and third-party systems), speeding up development of context-aware agents. And with a few clicks, you can deploy your agents into Microsoft 365 (Microsoft Teams and Office apps) or other platforms like Slack and Twilio—bringing agents directly into the tools your employees use every day.

4. Multi-agent orchestration

Real-world workflows often require multiple agents working together. Azure AI Foundry now makes that easy, across any cloud. Agents can call each other as tools (connected agents), passing tasks between specialized agents to solve complex problems collaboratively. New multi-agent workflows provide a stateful layer to manage context, handle errors, and maintain long-running processes—great for scenarios like financial approvals or supply chain operations. We’ve also implemented open interoperability standards—such as Agent-to-Agent (A2A) communication, to enable different agents to exchange information and coordinate tasks, and the Model Context Protocol (MCP) to enable agents to share and interpret contextual data consistently. These standards ensure agents can collaborate seamlessly across Azure, AWS, Google Cloud, and on-premises environments. Under the hood, we are unifying our Semantic Kernel and AutoGen frameworks to support this seamless agent orchestration.

And because Azure AI Foundry powers Microsoft Copilot Studio, you maintain a continuous loop—from model selection and fine-tuning to deploying pre-built agents. For instance, Stanford Medicine is already using our healthcare agent orchestrator in Azure AI Foundry alongside Microsoft Copilot Studio to streamline tumor-board meetings with custom clinical workflows.

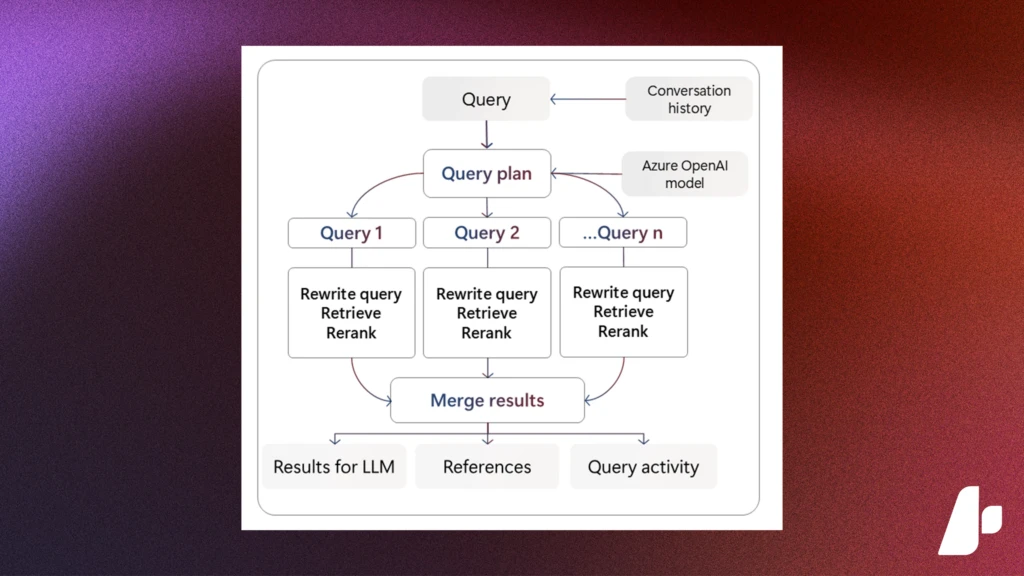

5. Agentic retrieval

We’re making information retrieval smarter to support these advanced agents. Agentic retrieval in Azure AI Search is a new multi-turn query engine designed for complex questions. It uses conversation context and an embedded LLM to break down user queries into sub-queries, runs multiple searches in parallel, and then compiles a comprehensive answer with citations. In early tests, this approach improves answer relevance by up to ~40% on complex, multi-part questions. Now in public preview, agentic retrieval lets your agents connect to enterprise data more effectively using advanced retrieval for accurate, grounded answers.

6. Always-on observability

To hold agents accountable in production, you need visibility into their performance. We’re previewing new Foundry Observability features that provide end-to-end monitoring and diagnostics. You’ll get built-in metrics on latency, throughput, usage, and quality—plus detailed trace logs of each agent’s reasoning steps and tool calls. During development, our Agents Playground now shows evaluation benchmarks and traces to help you refine prompts and logic. As you move to CI/CD, we offer integrations with GitHub and Azure DevOps to incorporate tests and guardrails. And in production, a unified dashboard (integrated with Azure Monitor) gives you real-time insight and alerts for your models and agents.

7. Enterprise-grade identity for agents

Microsoft Entra Agent ID is the first step in managing agent identities in your organization, giving you full visibility and control over what your AI agents can do. Microsoft Entra Agent ID assigns a unique, first-class identity to every agent you build with Azure AI Foundry or Microsoft Copilot Studio. This means your AI agents get the same identity management as human users—appearing in your Microsoft Entra directory so you can set access controls and permissions for each agent. Soon, security admins will be able to apply Conditional Access policies, multi-factor authentication, and least-privilege roles to agents, and monitor their sign-in activities. If an agent shouldn’t access a resource, it will be blocked just like a regular user would.

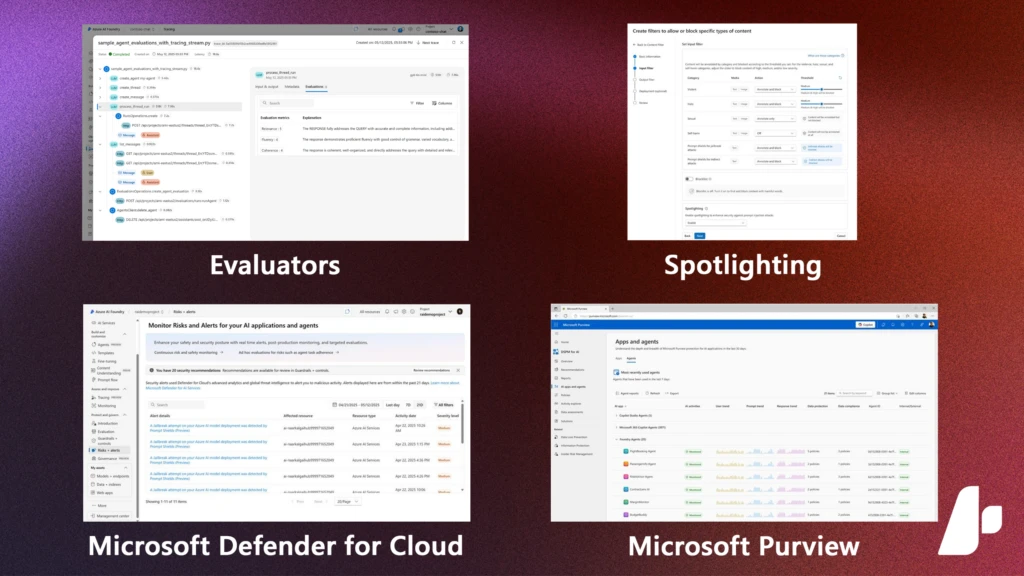

8. Trustworthy AI built-in

Responsible AI is non-negotiable for us and our customers. We’ve added new capabilities to discover, protect, and govern AI systems from the start. Agent Evaluators now automatically check if an agent is following user intent and using tools correctly, flagging issues for developers. An AI Red Teaming Agent constantly probes your agents for vulnerabilities or biases, so you can fix weaknesses before deployment. Our content filtering has gotten smarter with “Spotlighting”—an enhancement to Prompt Shields that better detects and mitigates malicious prompt injections (whether from users or incoming data). We’ve also enabled enhanced guardrails to prevent agents from revealing sensitive information (PII) or straying off approved tasks. On the security side, Azure AI Foundry integrates with Microsoft Defender for Cloud to raise alerts when threats occur. And to help with compliance, we offer out-of-box integration with governance tools like Credo AI, Saidot, and Microsoft Purview for tracking model performance, fairness, and regulatory requirements. From day one, Azure AI Foundry equips you with the safety, security, and governance tools to build AI you (and your users) can trust.

9. Foundry Local

Not all AI needs to run in the cloud—sometimes the best solution is at the edge. Foundry Local is a new runtime for Windows and Mac for AI models and agents. With Foundry Local, you can build cross-platform AI apps that work offline, keep sensitive data locally, and reduce bandwidth costs. This opens scenarios like manufacturing on a factory floor with spotty internet, or field service apps that need AI in remote areas. We are also integrating Foundry with Azure Arc. With the upcoming Azure Arc and Foundry integration, you can manage and update on-device AI deployments centrally. In short, Foundry becomes your AI factory—delivering generative AI where your data is, whether that’s in the cloud or on-premises.

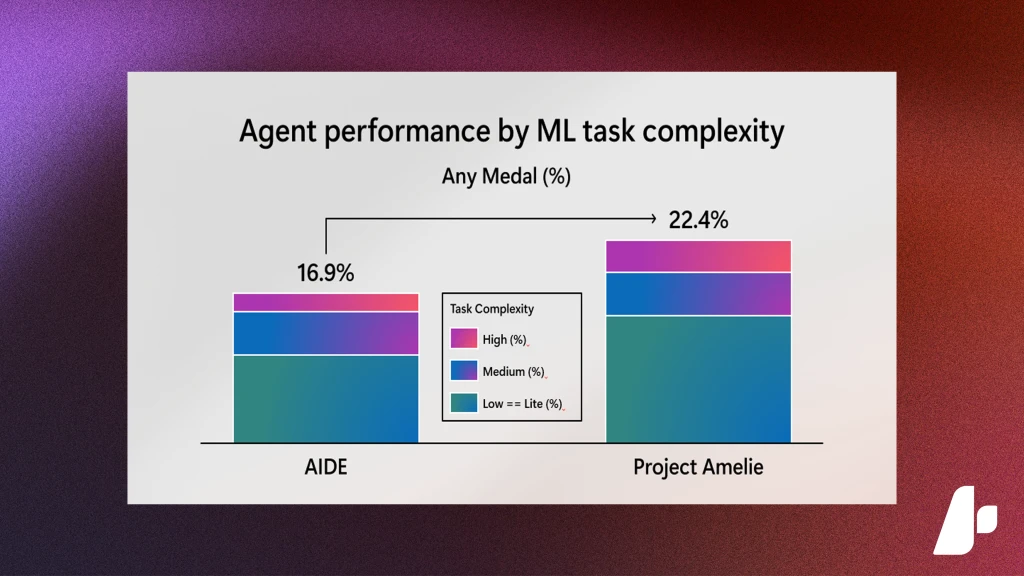

10. Future innovations for Foundry Labs

We’re exploring the next frontiers with Microsoft Research in our Foundry Labs. One exciting invention is Project Amelie, powered by RD Agent from Microsoft Research, an autonomous agent that can build complete machine learning pipelines from a single prompt. Give it a task like “predict customer churn from our dataset,” and Amelie will ingest data, train models, and produce a deployable solution—an experiment in AI developing AI.

We’re also rethinking how AI agents interact with people and each other: Magentic-UI (now open source) provides a visual canvas to prototype multi-agent workflows and human-in-the-loop interactions, and TypeAgent is bringing long-term memory to agents, enabling them to retain and recall knowledge over extended periods. In the scientific realm, new AI models like EvoDiff (for generating novel proteins) and BioEmu (for predicting how proteins change shape) are being tested in Azure AI Foundry to accelerate research in biology. These forward-looking projects show how Azure AI Foundry is continually innovating—so you’ll always have access to the latest breakthroughs in AI.

The path forward with Azure AI Foundry

Join us at Microsoft Build 2025 to see these new capabilities in action and learn how they can transform your business. We’re excited to work with you—our community of developers and customers—to shape this new era of AI. Together, we’re making AI more accessible, powerful, and trustworthy for everyone. Learn more and get started with Azure AI Foundry.

Resources:

- Review Azure AI Foundry documentation.

- Take the Azure AI Foundry Learn Course.

- Download the Azure AI Foundry SDK.

- Chat with us on Discord.

- Provide feedback on GitHub.

The post Azure AI Foundry: Your AI App and agent factory appeared first on Microsoft Azure Blog.